Maintenance windows. Let’s be honest, they suck. If you ask any network admin, they will likely tell you that midnight maintenance windows are their least favorite part of the job. They are a necessity because of the very nature of what we do, which is build, operate, and maintain large, complex networks, where any change can have a far-reaching and often unpredictable impact on production systems. That is impact we must avoid whenever possible. So we schedule downtime and amp up our caffeine intake for an evening of making changes and testing whatever we may have broken.

No matter how meticulous you are in your planning, no matter how well you know the subtle intricacies of your environment, something, somewhere is going to go wrong. Even if you are one of the lucky few to have a lab environment in which to test changes, it’s often not even close to the scale of your actual network.

But what if you had a completely accurate, full-scale model of your network, and could test those changes without risking your production network? A break/fix playground that would let you vet any changes you needed to make, which would in turn give you the peace of mind of shorter, smoother maintenance windows, or perhaps (GASP!) no maintenance windows at all?

Go ahead, break it.

That’s what Forward Networks’ co-founders David Erickson and Brandon Heller want you to do within their Forward Platform, as they bring about a new product category they call Network Assurance:

“Reducing the complexity of networks while eliminating the human error, misconfiguration, and policy violations that lead to outages.”

At Network Field Day 13, only a few days after Forward Networks came out of stealth, we had the privilege of hearing, for the first time, exactly who and what Forward Networks was, and how their product would “accelerate an industry-wide transition toward networks with greater flexibility, agility, and automation, driven by a new generation of network control software.”

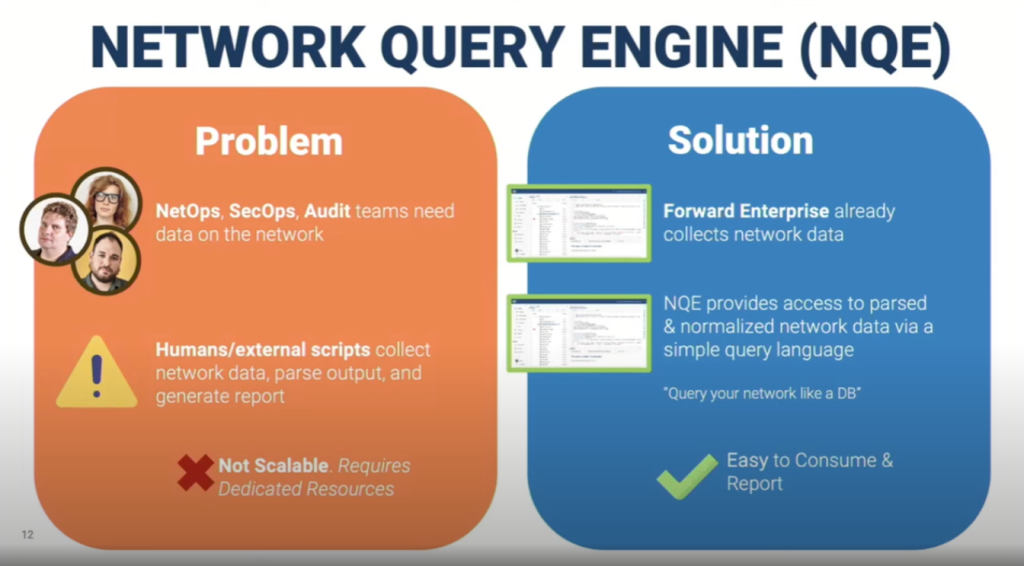

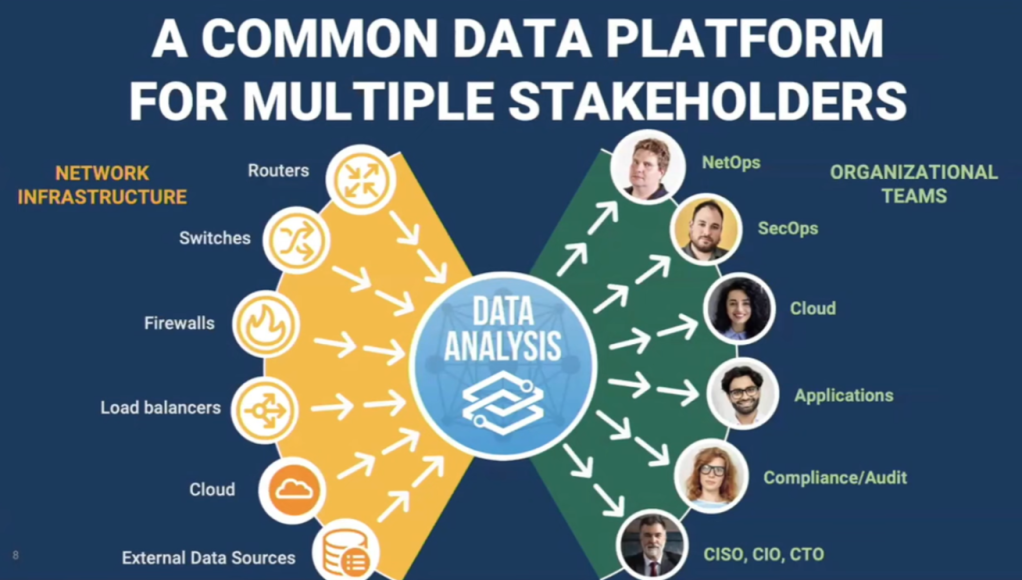

David Erickson, CEO and co-founder, spoke about how modern networks are complex: they are made up of hundreds if not thousands of devices, are often heterogeneous, and can contain millions of lines of configuration, rules, and policy. The tools we have to manage these networks are outdated (ping, traceroute, SNMP, etc.), and the time a network admin spends going through device configurations looking for problems can be overwhelming. As a result, a significant portion of outages in today’s networks are caused by simple human error, which has far-reaching impact on both business and brand.

The Forward Platform is not a simulation or emulated model of your network, but a full-scale replica, in software, that you can review, verify, and test against without risk to production systems. According to Forward Networks, their algorithm traces through every port in your network to determine where every possible packet could go within the network as it is presently configured. In other words, the “all packet”.
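To make the idea of tracing packets through a software model a bit more concrete, here is a minimal sketch. It is not Forward Networks’ algorithm, which was not shown in detail. It assumes a toy model in which every device is just a list of destination prefixes and next hops, and every name in it (`FORWARDING`, `lookup`, `trace`, the device names) is hypothetical.

```python
import ipaddress

# Hypothetical toy model: each device maps destination prefixes to a next hop.
# A next hop of None means the packet is delivered locally on that device.
FORWARDING = {
    "edge-rtr": [("10.0.0.0/8", "core-sw"), ("0.0.0.0/0", None)],
    "core-sw": [("10.1.0.0/16", "web-tor"), ("10.0.0.0/8", "edge-rtr")],
    "web-tor": [("10.1.2.0/24", None)],
}


def lookup(device, dst):
    """Longest-prefix match on one device; returns (network, next_hop) or None."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, hop in FORWARDING[device]:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    return best


def trace(start, dst):
    """Follow a destination hop by hop; returns (path, outcome)."""
    path, seen, device = [], set(), start
    while device is not None:
        if device in seen:
            # We have come back to a device already on the path.
            return path + [device], "loop"
        seen.add(device)
        path.append(device)
        match = lookup(device, dst)
        if match is None:
            return path, "dropped"
        device = match[1]
    return path, "delivered"


print(trace("edge-rtr", "10.1.2.10"))
print(trace("edge-rtr", "10.2.0.1"))
```

Even in a three-device toy, walking the model turns up a forwarding loop for `10.2.0.1` without a single packet touching a real device. A real model would also have to account for ACLs, NAT, VLANs, and vendor-specific behavior, all of which this sketch ignores.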

Applications

The three applications that were demonstrated for us were Search, Verify, and Predict.

Search – think “Google” for your network. Search devices and behavior within an interactive topology.

Verify – See if your network is doing what you think it should be doing. Every policy is applied with some intent. Is your intent being met?

Predict – When you identify the need for a change, how can you be sure the change you make will work? How do you know that change won’t break something else? Test your proposed changes against the copy of your network and see exactly what the impacts will be.

Forward Search

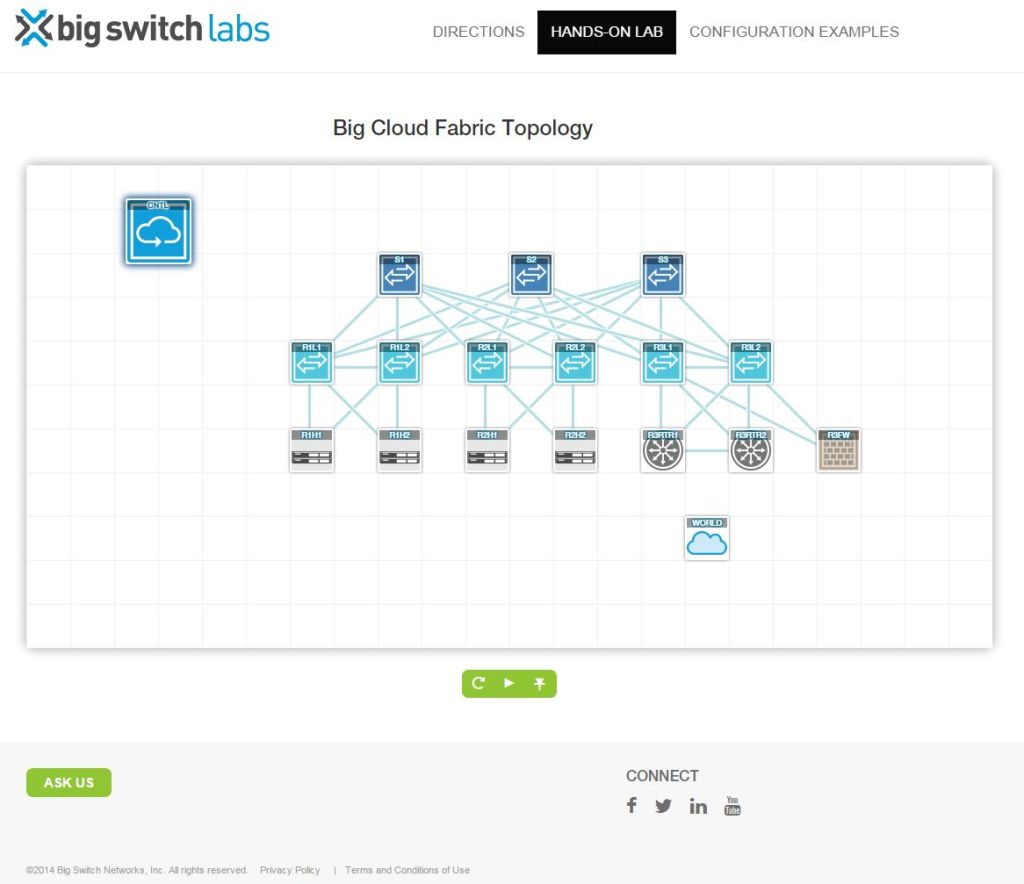

Brandon Heller offered an in-depth demo of these tools, beginning with Search. Looking at a visual overview of the demo network, he was able to query in very simple terms for specific traffic, in this case traffic from the Internet to his web servers. In a split second, Search zoomed in on a subset of the network topology, showing exactly where this traffic would flow. Diving further into the results, each device showed the rules or configuration that allowed this traffic across it, in an intuitive step-through menu that traced the specified path through the entire network and highlighted the relevant configuration or code.

This was all done in a few seconds, on a heterogeneous topology of Juniper, Arista, and Cisco devices.

Normally, tracing the path through the network would require a network admin, with knowledge of each of those vendors, to manually test with tools like ping and traceroute, and also comb through each configuration device-by-device along the path he or she thought was the correct one, in order to verify the traffic was flowing properly.

The response time on the queries was snappy, and Brandon explained this was due to the fact that, like a search engine, everything about the network was indexed ahead of time, making queries almost instantaneous.
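The search-engine comparison can be sketched in a few lines. This is only an illustration of the index-ahead-of-time idea, not how Forward Search is built. The topology, `build_index`, and `search` are all made up for the example.

```python
from collections import deque

# Hypothetical one-way topology: device -> devices it can forward to.
LINKS = {
    "internet": ["fw"],
    "fw": ["core"],
    "core": ["web1", "web2"],
    "web1": [],
    "web2": [],
}


def build_index(links):
    """Precompute a shortest path for every reachable (src, dst) pair."""
    index = {}
    for src in links:
        queue = deque([[src]])
        visited = {src}
        while queue:
            path = queue.popleft()
            index[(src, path[-1])] = path
            for nxt in links[path[-1]]:
                if nxt not in visited:
                    visited.add(nxt)
                    queue.append(path + [nxt])
    return index


# The expensive work happens once, up front.
INDEX = build_index(LINKS)


def search(src, dst):
    """Answer a query with a single dictionary lookup; None if unreachable."""
    return INDEX.get((src, dst))


print(search("internet", "web1"))
```

The trade-off is the usual one for an index: all the graph walking is paid for when the model is built, so each query afterwards is just a lookup.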

Forward Verify

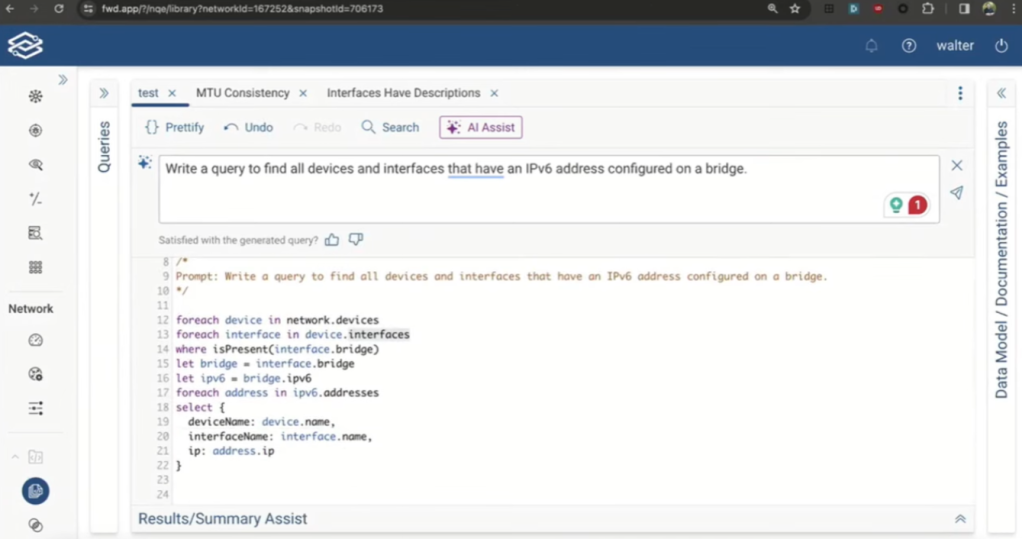

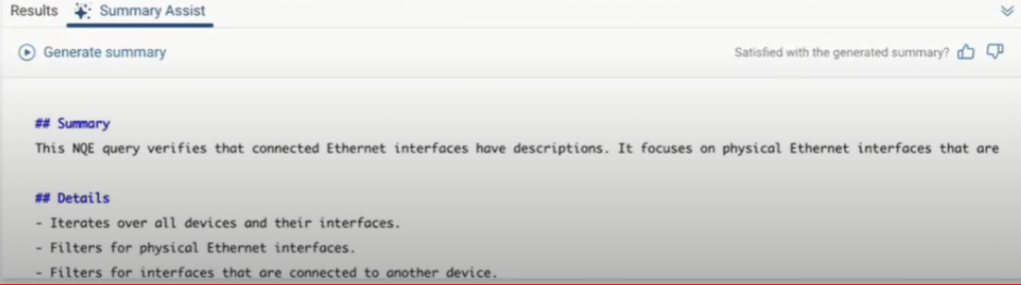

It’s one thing to understand how your network should behave, and another to be able to test and confirm this behavior. Forward Verify has two ways of doing this. The first is a library of predefined checks that identify configuration errors which are fairly common yet easy to miss, such as duplex mismatches.
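A predefined check of this kind is easy to picture. The sketch below assumes a hypothetical link inventory that records the duplex setting on both ends of each link. The data layout and the `duplex_mismatches` function are illustrative only and say nothing about how Forward Verify stores or evaluates its checks.

```python
# Hypothetical link inventory: each link lists both ends as (device, port, duplex).
LINKS = [
    (("sw1", "eth1", "full"), ("sw2", "eth1", "full")),
    (("sw2", "eth2", "full"), ("rtr1", "ge-0/0/1", "half")),
]


def duplex_mismatches(links):
    """Return every link whose two ends disagree on duplex."""
    return [(a, b) for a, b in links if a[2] != b[2]]


for a, b in duplex_mismatches(LINKS):
    print(f"duplex mismatch: {a[0]} {a[1]} ({a[2]}) <-> {b[0]} {b[1]} ({b[2]})")
```

The check itself is trivial. The hard part in a real network is collecting both ends of every link from devices that report this in different ways.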

The second is network-specific policy checks. Here, once again, a simple, intuitive query verified that bidirectional traffic between the Internet and the web servers could flow via HTTP and SSH.

When there is a failure, a link is provided which allows you to drill down into the pertinent devices and their configuration and see where your policy check is failing.
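As a rough illustration of a policy check that points at the failing device, here is a small sketch. It assumes a fixed path and a simplified, first-match ACL on each device, and it only checks one direction of traffic. The device names, `ACLS`, `permitted`, and `check_policy` are all hypothetical.

```python
# Hypothetical ordered ACLs per device: (action, protocol, port).
# A protocol of "any" matches every protocol; a port of None matches every port.
ACLS = {
    "edge-fw": [("permit", "tcp", 80), ("permit", "tcp", 22), ("deny", "any", None)],
    "core-sw": [("permit", "any", None)],
    "web-tor": [("permit", "tcp", 80), ("deny", "any", None)],
}

# Assumed path from the Internet to the web servers.
PATH = ["edge-fw", "core-sw", "web-tor"]


def permitted(device, proto, port):
    """First matching rule wins; no match means implicit deny."""
    for action, rule_proto, rule_port in ACLS[device]:
        if rule_proto in ("any", proto) and rule_port in (None, port):
            return action == "permit"
    return False


def check_policy(path, proto, port):
    """Return None if the traffic passes, else the first device that blocks it."""
    for device in path:
        if not permitted(device, proto, port):
            return device
    return None


for name, port in (("http", 80), ("ssh", 22)):
    blocker = check_policy(PATH, "tcp", port)
    print(name, "PASS" if blocker is None else f"FAIL at {blocker}")
```

Here the HTTP intent passes while SSH fails at `web-tor`, which is the kind of pointer you would want before opening a single device configuration.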

Forward Predict

When a problem is identified or a change to the network configuration is necessary, Forward Predict is the final tool in the suite, and in my opinion, the most important one, as it allows you to test a change against your modeled network to see what impact it will have. This is huge, as typically changes are planned, implemented and then tested in a production environment in a change or maintenance window.

Forward Predict, while it may not eliminate the need for proper planning and implementation, allows you to build and test configuration changes in what is essentially a fully duplicated sandbox model of your exact environment. This is going to make those change windows a lot less painful as you already know what the outcome will be, rather than troubleshooting problems that weren’t anticipated when the changes were planned.
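The general pattern of testing a change against a copy can be sketched as follows. This is an assumption-laden toy, not Forward Predict itself: the model is a plain dictionary, the checks are two hand-written lambdas, and `predict`, `run_checks`, and `remove_ssh` are names invented for the example.

```python
import copy

# Hypothetical model of the network: device -> set of ports allowed through it.
MODEL = {
    "web-tor": {"allowed_ports": {80, 22}},
    "db-tor": {"allowed_ports": {5432}},
}

# Hypothetical intent checks, each a function of the model.
CHECKS = {
    "web reachable over http": lambda m: 80 in m["web-tor"]["allowed_ports"],
    "web reachable over ssh": lambda m: 22 in m["web-tor"]["allowed_ports"],
}


def run_checks(model):
    """Evaluate every check against a model; returns {check name: passed}."""
    return {name: check(model) for name, check in CHECKS.items()}


def predict(model, change):
    """Apply a change to a copy of the model and list checks it would break."""
    candidate = copy.deepcopy(model)  # the original model is never modified
    change(candidate)
    before, after = run_checks(model), run_checks(candidate)
    return sorted(name for name in before if before[name] and not after[name])


def remove_ssh(model):
    """Proposed change: stop allowing SSH through the web top-of-rack switch."""
    model["web-tor"]["allowed_ports"].discard(22)


print(predict(MODEL, remove_ssh))
```

The useful property is that the proposed change only ever touches the copy, so you find out which intents break before the change window rather than during it.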

Moving “Forward”

A common sentiment among NFD delegates during this presentation was that Forward Networks’ product did some amazing things. However, we wondered if there was an opportunity to take it one step further and have it actually implement the changes on the network after they have been vetted by Forward Predict.

Forward Adjust, perhaps?

Understandably, this is going to involve a lot of testing, especially since Forward is completely vendor-neutral and touts the ability to work with complex, mixed environments. Making changes in those types of environments adds a lot of responsibility to this platform, and with that comes risk. That is risk most engineers might be reluctant to entrust to a single platform.

Time will tell. I look forward to hearing more about Forward Networks’ development over the upcoming months, and to seeing where the Network Assurance platform takes us.

Check out the entire presentation over at Tech Field Day, including a fantastic demonstration from Behram Mistree on how Forward Verify can help mitigate and diagnose outages in complex, highly resilient networks.